Instructors Only

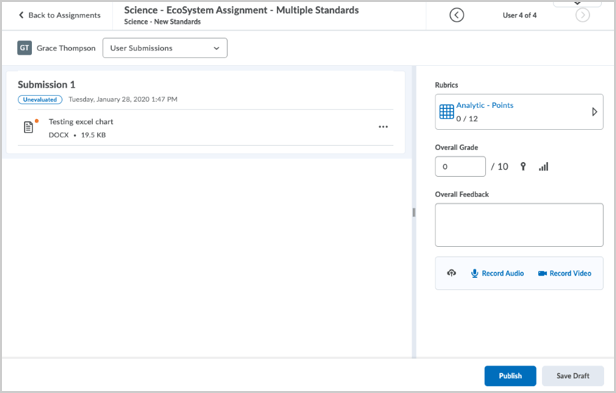

Dropbox – Consistent evaluation experience

The consistent evaluation experience for Dropbox brings consistency to evaluating in Brightspace Learning Environment for all assessable tools. This feature is opt-in so that users can familiarize themselves with the new experience when evaluating in Dropbox. In future releases, this evaluation experience will become available in additional tools.

The default value for this configuration is On (Opt-In).

No changes have been made to page navigation. The user context bar now includes a menu to choose a learner, and another menu to choose a submission. On the user submission list, files are now grouped by submission without repeating comments to make it easier to distinguish between submissions. The evaluation pane divider is now draggable to suit a user’s preferences and screen size. Rubrics are now located at the top of the page, and assignment details are in the ellipsis menu. The overall grade remains as an icon that indicates if that grade is tied to the gradebook. Existing outcomes and competency web components are also visible. Publish, Save Draft, and Next Student options continue to function as before.

Instructors should wait to turn on the Consistent Evaluation experience in this release if they:

- Use Turnitin – No integration with Turnitin is available in this release of the consistent evaluation experience.

- Use Anonymous Marking/Grading – Learner name display does not respect the Anonymous Marking setting.

- May have multiple rubrics attached to assignments – Only one rubric displays in the consistent evaluation experience.

- Use large rubrics with many levels or criteria – Large rubrics may have reduced usability because of how the new experience displays rubrics. All levels and criteria display, though large rubrics may require more scrolling.

- Use Group assignments extensively – There is no access to view group members from within the consistent evaluation experience.

- Use the Edit a Copy function to annotate text or HTML submissions – This option is not available in the consistent evaluation experience.

- Require access to Student ID (or Class Progress) while evaluating – These options are not accessible from within the consistent evaluation experience.

These items will be addressed in future updates to the Consistent Evaluation experience.

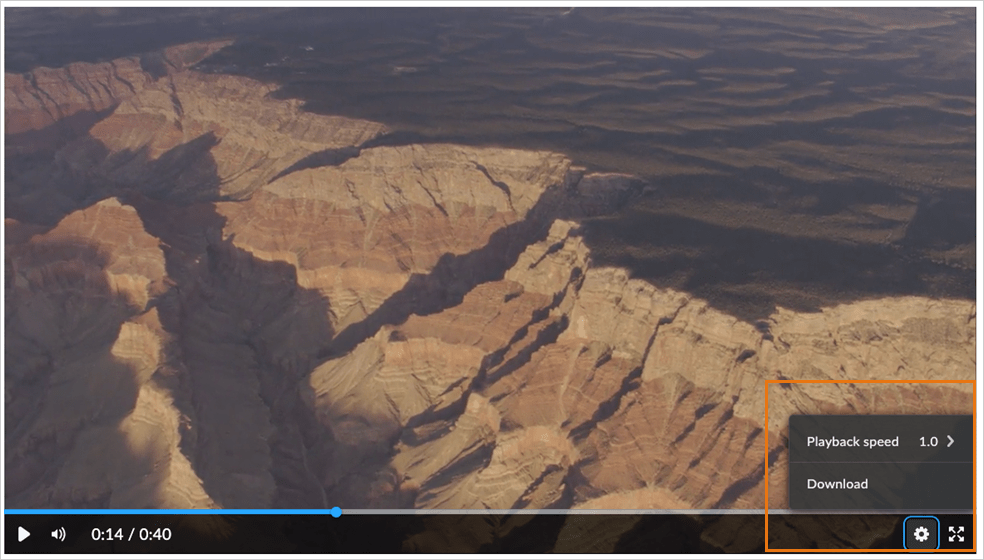

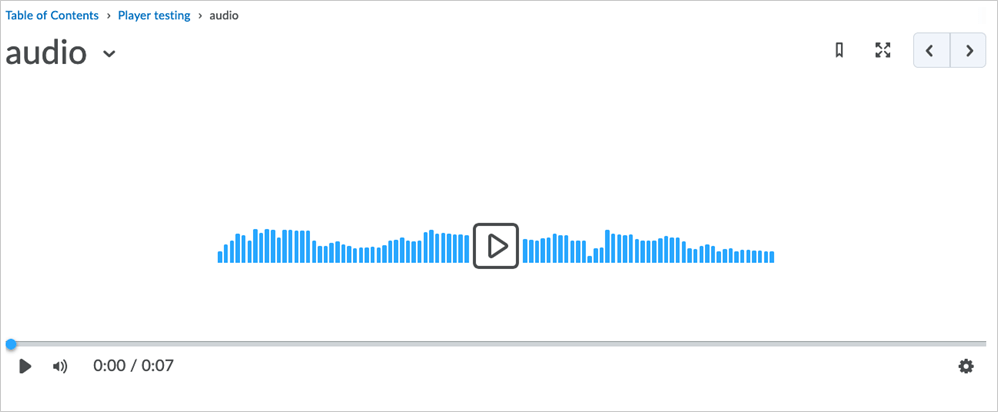

CourseLink – Updated video and audio player in Content

To improve accessibility and create a consistent user experience, a new audio and video media player has been added to Content.

The new media player offers the following features:

- Consistent keyboard controls and screen reader support.

- Keyboard control improvements and fixes to known issues. The previous media player had an issue where keyboard focus could become stuck in the caption language selection menu.

- Screen reader improvements, including off-screen messages that announce when a video has loaded or if there was an error loading the video.

Note: Download will not be turned on by default.

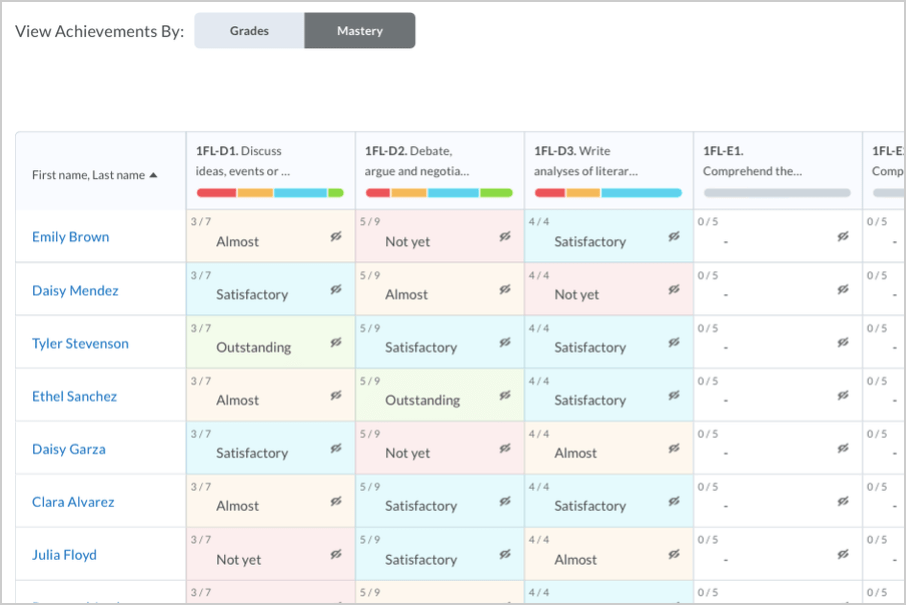

Grades – Mastery view in the Gradebook now available

The Mastery view of the Gradebook provides visibility into additional details of learner performance beyond what is available using traditional grades associated with an activity. Using outcomes associated with various types of assessments (such as quiz questions, rubric criterion rows, assignments, or discussions), additional dimensions of performance can be recorded. The Mastery page provides instructors with information about the overall achievement of learners in their course. The achievement is related to learning outcomes and standards associated with assessment activities and helps instructors quickly identify learners who are potentially at risk. The page provides a quick, holistic view of aggregated performance across all learners in the course for all outcomes assessed in the course. Instructors can click individual table cells in the Mastery view to drill down into an individual learner’s performance and view the evidence associated with each outcome. In that detailed view, instructors can use their professional judgement to manually override the calculated suggested level of overall achievement.

Note: This option is hidden if learning outcomes are not being used in the course.

The Mastery view table displays evaluated outcomes as the columns of the table and learners enrolled in the course as rows of the table. In the columns for each outcome, there is a color and text indication of the suggested overall level of achievement based on the currently selected calculation method. The color of each cell and the text label is based on the color and level name defined in the default Achievement Scale in the Learning Outcomes tool.

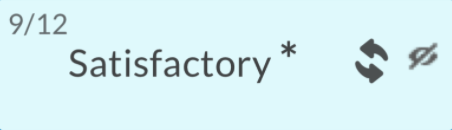

Within each achievement cell, there are several indicators of the status of the learner’s progress toward the achievement, including:

- Number of activities evaluated (out of the total aligned)

- Suggested overall level of achievement (or manually overridden level of achievement)

- Manual override indicator in the form of an asterisk beside the overall achievement label

- Out of Date icon (if newer evaluations have been made or feedback has been added since a manual override was recorded)

- Published/Not published icons to indicate the visibility status to the learner

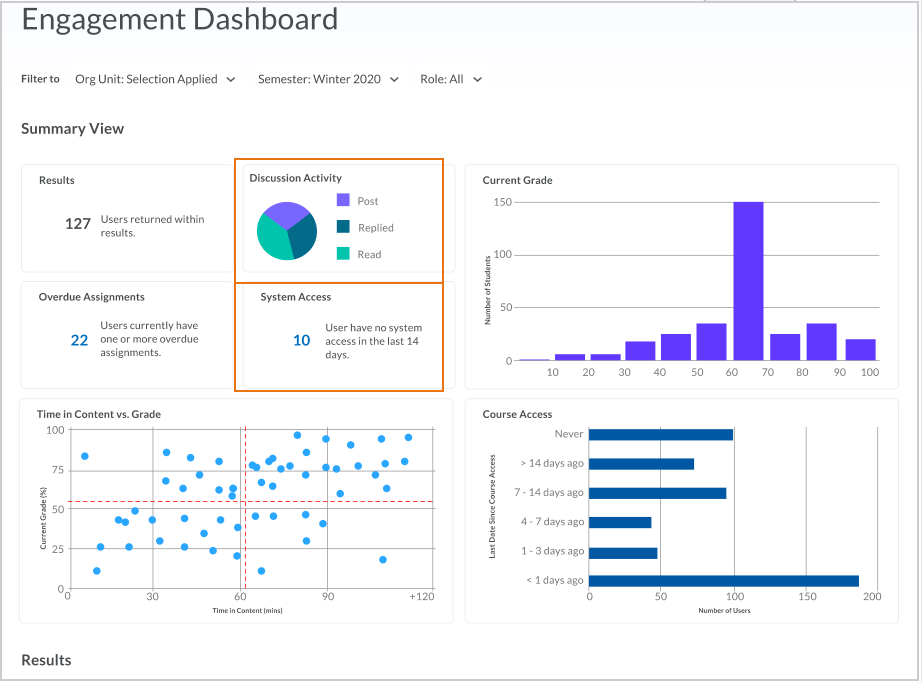

Insights – Additional cards on the New Engagement Dashboard

The new Engagement dashboard contains visualizations that provide insight into how users are engaging with their courses. Using this information, users can identify at-risk learners and intervene to get them back on track. To increase the value of the Engagement dashboard, originally released in March 2019, and updated in November 2020, this release updates and adds the following new features:

- The Discussion Summary pie chart indicates the number of discussion threads created, replied to, and read. If you hover over a pie segment, a popup appears with a number and description of the segment. This is helpful for understanding the proportion of passive or active social engagement. If you click a segment, the rest of the Engagement dashboard automatically filters the returned data for the learners represented by the segment. This is also summarized in the results table.

- The System Access summary card indicates the number of users who have not accessed the system in the previous 14 days. Access can be through Brightspace Pulse or through Brightspace in a web browser. This is also summarized in the results table.

- The ability to take action from the dashboard by exporting the results table or emailing one or many users. Users require permission to see and send emails at the Org Level in order to take advantage of the email option.

Note: The default setting for this feature continues to be Opt-in.

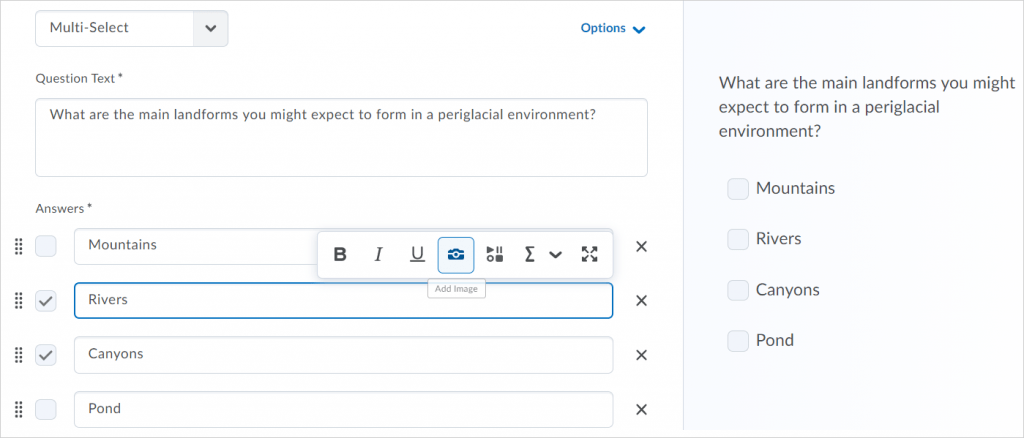

Quizzes – Improved workflow for creating multi-select questions

As part of the ongoing initiative to improve the quiz creation experience for instructors, this release streamlines the interface for creating multi-select questions, making the workflow simpler and more intuitive.

When instructors initially launch the Question Editor to create a multi-select question, the interface displays the two main components of a multi-select question: the question and potential answers. Selecting a field displays a pop-up toolbar for formatting the text and adding images, links, and graphical equations. As each field is completed, the preview pane displays how the question and answers appear to learners.

Next, instructors can choose to click Options to add the following optional information to the multi-select question: Add Feedback, Add Hint, Add Short Description, and Add Enumeration.

Instructors can then choose to randomize the order of answers, assign points, and determine how points are assigned to answers.

For determining how points are assigned to answers, a new grading type is available in both the classic and the new multi-select question experience: Correct Answers, Limited Selections. For this grading type, points are evenly distributed across correct answers only. The number of selections allowed is limited to the number of correct answers. Learners earn partial points for each correct answer selected.
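To illustrate the arithmetic behind this grading type, the following is a minimal sketch in Python, not Brightspace's actual implementation. The function name `score_limited_selections` and its parameters are hypothetical, and the sketch assumes that incorrect selections simply earn nothing and do not deduct points, which is how the description above reads.

```python
def score_limited_selections(points, correct_answers, selected_answers):
    """Sketch of 'Correct Answers, Limited Selections' scoring.

    Hypothetical helper for illustration only. Assumes incorrect
    selections earn zero and are not penalized.
    """
    correct = set(correct_answers)
    selected = set(selected_answers)

    if not correct:
        raise ValueError("A question needs at least one correct answer.")
    # The number of selections is limited to the number of correct answers.
    if len(selected) > len(correct):
        raise ValueError("Too many selections for this question.")

    # Points are evenly distributed across correct answers only.
    points_per_correct = points / len(correct)
    # Partial points are earned for each correct answer selected.
    return points_per_correct * len(selected & correct)


# Example: a 6-point question with three correct answers (A, C, D).
# The learner selects A, B, and C: two correct selections at 2 points each.
print(score_limited_selections(6, {"A", "C", "D"}, {"A", "B", "C"}))  # 4.0
```

In this example, each correct answer is worth 2 of the 6 points, so a learner who selects two of the three correct answers earns 4 points.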

Quizzes – Improvements to the ability to retake incorrect questions in quizzes

Building on the previously released Quizzes – Retake incorrect questions in subsequent attempts feature, this release includes the following improvements:

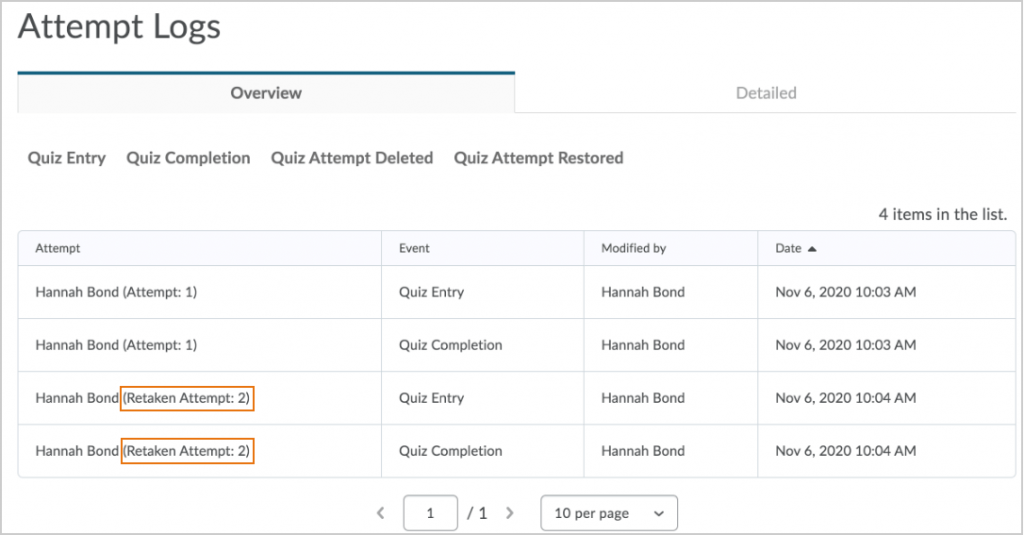

- Instructors can now identify retaken attempts in the Attempt log.

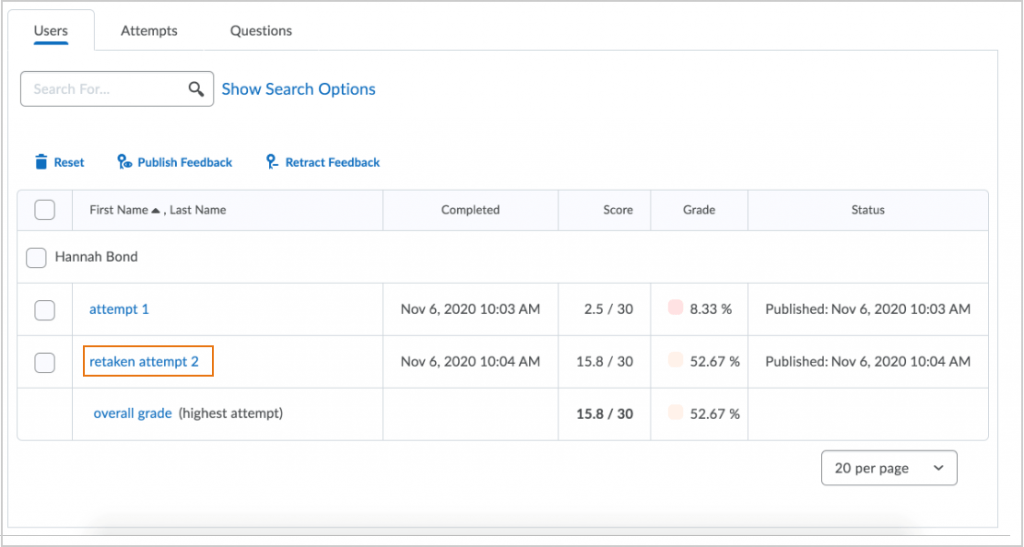

- Instructors can now identify a Retaken Attempt while grading a quiz in the Quizzes tool on the User tab and the Attempts tab.

Contact

If you have any questions about the updates, please contact CourseLink Support at:

courselink@uoguelph.ca

519-824-4120 ext. 56939