Students and Instructors

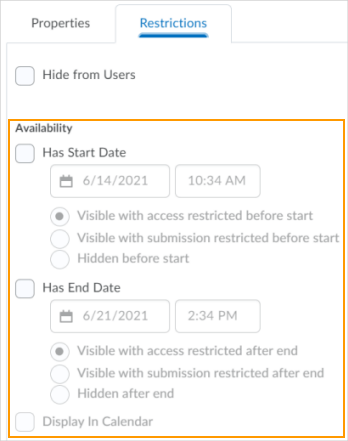

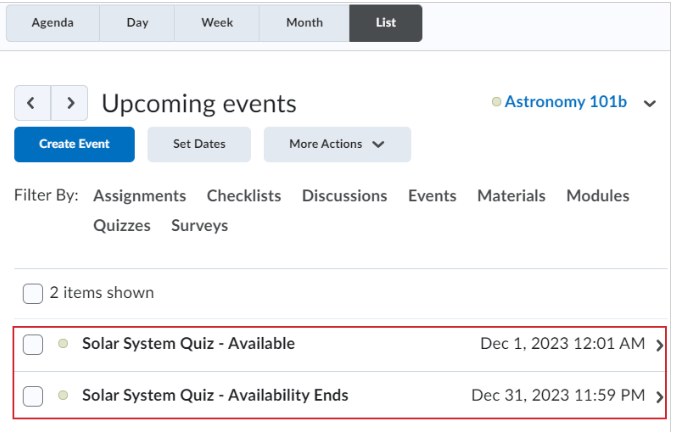

Calendar – View quiz start and quiz end dates as separate events in Calendar

When an instructor adds a Start Date and End Date in Quizzes and then selects Add Availability Dates to Calendar, both dates are displayed in the Calendar tool as separate events. Previously, only a single event was displayed, showing when the quiz's availability ended.

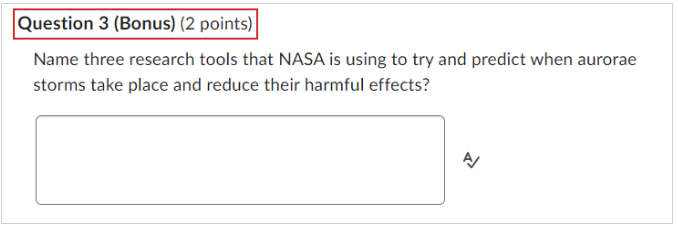

Quizzes – Identify bonus questions in a quiz

Quizzes now clearly label bonus questions with Bonus, which improves learner understanding. Previously, Quizzes gave no indication that a question was a bonus question.

Instructors Only

Announcements – Copy announcements to other courses in published state

The ability to copy announcements to other courses was originally released for the Announcements tool in November 2023.

As of this release, users with the appropriate role permissions can publish copied announcements in other courses using either the Announcements tool or the Announcements widget. To do this, select the Copy to Other Courses option from the announcement's context menu. The Copy Announcement window now includes a check box labeled Publish Announcement on Copy. When this box is selected, the announcement is published directly in the destination course or courses, skipping the draft stage. If the check box is not selected, the announcement is copied as a draft into the destination course or courses.

Previously, copied announcements appeared in draft form and required publishing in the destination course to complete the process.

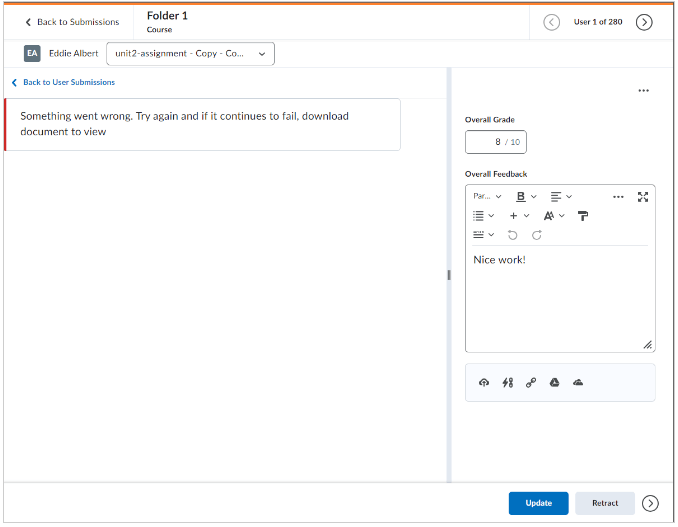

Dropbox – Interface improvements for annotations

As part of our internal technical maintenance work, CourseLink is updating the Annotations tool in Dropbox to be a web component. As a result, the user interface for the annotations viewer includes the following improvements:

- The error state includes updated dialog wording.

- Document conversion state now has in-page messaging and a loading icon. Previously, there was a dialog displaying the messaging.

- General loading state now has a loading icon. Previously, there was no loading icon.

- Note annotations and their print format now show the annotations themselves instead of only the annotations icon.

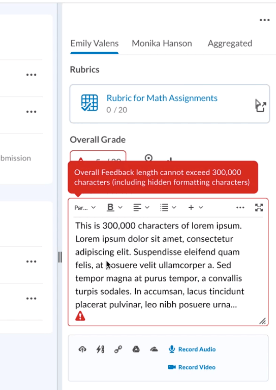

Dropbox and Discussions – Feedback field limitations for evaluation experiences

The character limit for the evaluation feedback field in the Dropbox and Discussions tools is changing from 500,000 characters to 300,000 characters. An updated warning dialog now appears when the user reaches 300,000 characters.

The character limit update improves performance and security for evaluations. Previously, when a user entered more than 300,000 characters, the additional text might not have been saved, so anything entered between 300,000 and 500,000 characters could be lost.

Discussions – New Discussion Topic Editing Experience universally enabled

With this release, the New Discussion Topic Editing Experience, which was introduced in the December 2022 release, is universally enabled.

The New Discussion Topic Editing Experience is turned on by default with no option to opt out, and the Legacy Discussion Topic Editing Experience is no longer available after this release.

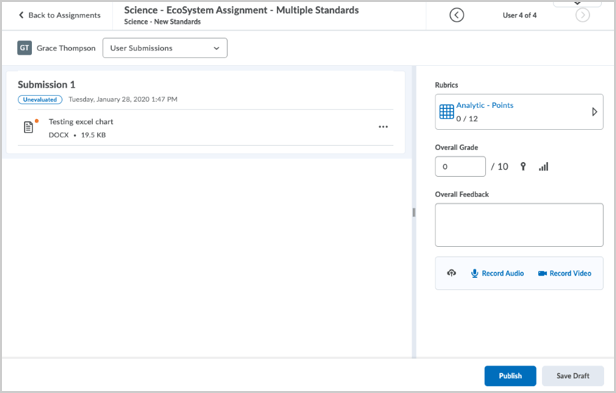

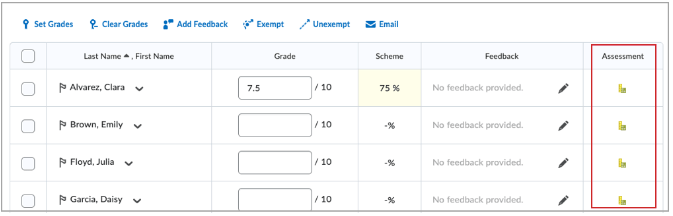

Rubrics – Grades tool now uses Consistent Evaluation interface for assessments

When an evaluator assesses a Dropbox submission or discussion in the Grades tool, the rubric now uses the Consistent Evaluation interface whether or not there is work submitted for evaluation. This matches the assessment experience for Dropbox and Discussions in other areas of CourseLink.

Previously, evaluations for these types of activities used a pop-out rubric grid when launched from the Grades tool. Now, when users click the drop-down menu on a column header to enter grades, rows with unsubmitted work use the Consistent Evaluation experience instead of the previous rubric pop-out.

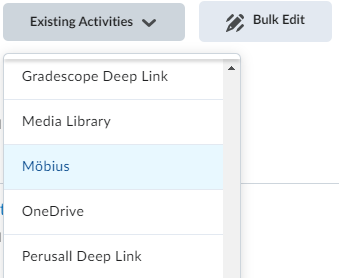

Reminder: Möbius – Upgrade CourseLink integration

On December 22, the deprecated Möbius integration (LTI 1.1) will be removed. The new Möbius integration (LTI 1.3) is now available and has been added to Content > Existing Activities > Möbius.

Please note: The integration will not update automatically. Instructors must select the new LTI 1.3 version from the Content menu. The old version links will be removed.

Please contact CourseLink Support if you need assistance with adding the new LTI 1.3 integration.

Reminder: Macmillan – Upgrade CourseLink integration

On December 22, the deprecated Macmillan integration (LTI 1.1) will be removed. The new Macmillan integration (LTI 1.3) is now available.

The integration is available under two link options:

- Content > Existing Activities > Macmillan Learning Content (Deep Linking Quicklink)

- Content > Existing Activities > External Learning Tools > Macmillan Learning Course Tools (Basic Launch)

For more information about using the Macmillan CourseLink integration, please review the Macmillan (LTI 1.3) integration support materials.

Please note: The integration will not update automatically. Instructors must select the new LTI 1.3 version from the Content menu. The old version links will be removed.

Please contact CourseLink Support if you need assistance with adding the new LTI 1.3 integration.

Reminder: Panopto – Upgrade Guelph-Humber CourseLink integration

On December 22, the deprecated Panopto integration (LTI 1.1) will be removed. The new Panopto integration (LTI 1.3) will be made available.

The integration is available under three link options:

- GH Panopto Deep Linking Quicklink (any quicklink location)

- Content > Existing Activities > External Learning Tools > GH Panopto Launch (Basic Launch)

- GH Panopto Deep Linking (editor Insert Stuff video link)

Please note: The integration will not update automatically. Instructors must select one of the new LTI 1.3 links. The old version links will be removed.

Please contact CourseLink Support if you need assistance with adding the new LTI 1.3 integration.

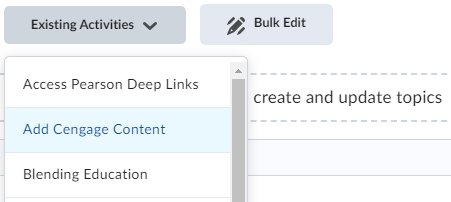

Reminder: Cengage – Upgrade CourseLink integration

On December 22, the deprecated Cengage integration (LTI 1.1) will be removed. The new Cengage integration (LTI 1.3) is now available and has been added to Content > Existing Activities > Add Cengage Content.

Please note: The integration will not update automatically. Instructors must select the new LTI 1.3 version from the Content menu. The old version links will be removed.

Please contact CourseLink Support if you need assistance with adding the new LTI 1.3 integration.

Contact

If you have any questions about the updates, please contact CourseLink Support at:

courselink@uoguelph.ca

519-824-4120 ext. 56939